The rise of artificial intelligence (AI) is giving fraudsters new ways to steal personal and financial information, and ultimately large sums of cash.

In a tearful video on TikTok, Brooke Bush, who uses the handle @babybushwhacked, claims a scammer used AI technology to trick her grandfather into thinking his grandson — her brother — had been killed in a car crash, according to WFAA.

Using AI “voice cloning” technology, fraudsters can trick people into thinking a loved one is speaking to them on the phone, according to a consumer alert from the Federal Trade Commission (FTC).

To make the scam more convincing, they can also spoof the caller ID so the call appears to come from a loved one’s number.

Authorities call these “grandparent scams”: scammers contact a grandparent, impersonate a grandchild or other close relative, convince them that a crisis has occurred, and ask for immediate financial help, WFAA reported.

Scammers often invent a reason the grandparent should not contact the grandchild’s parents, the FTC warns, with lines such as: “Grandpa, I’m in trouble and I need money for bail. … Please don’t tell mom and dad. I’ll get in so much trouble.”

The fake calls can also come in the form of a voicemail.

The FTC says scammers need only a short clip of a family member’s voice, which can be pulled from social media, to impersonate that person.

As the technology grows more sophisticated, imposter scams are on the rise: consumers lost $2.6 billion to them in 2022, a $200 million increase from 2021, according to the FTC.

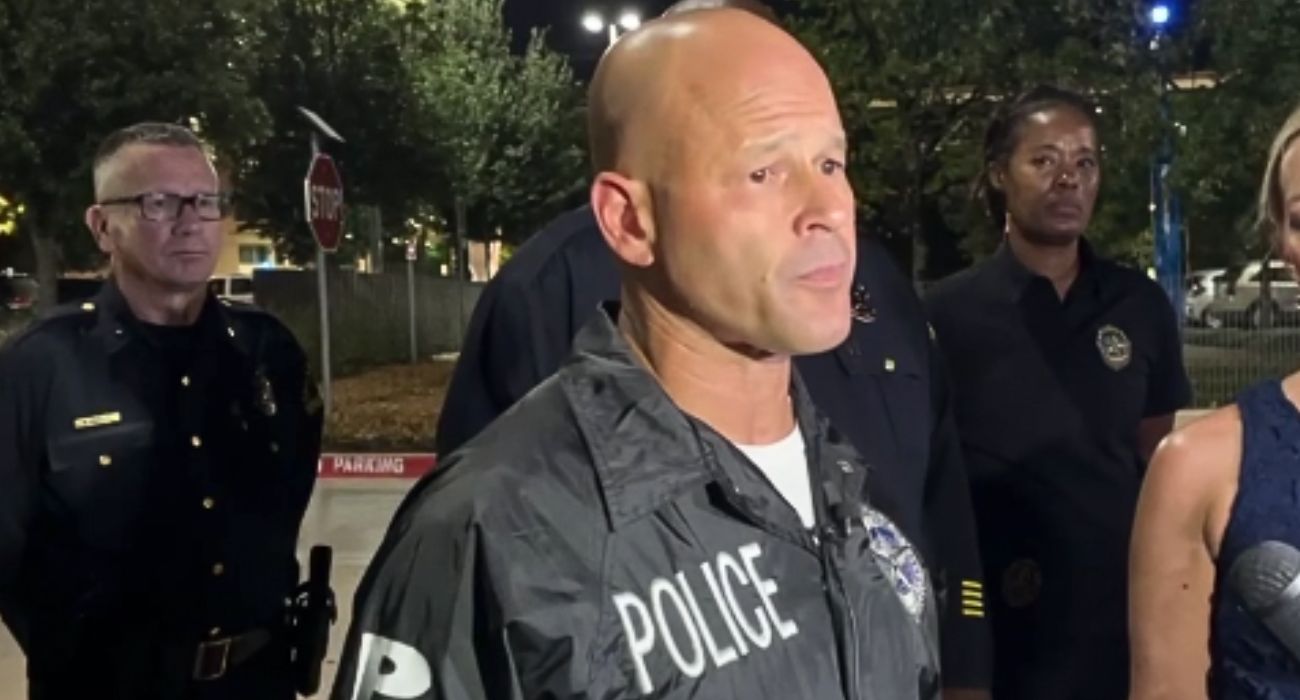

Such crimes are also growing more common in Dallas. As of May 5, 550 incidents classified as “false pretenses/swindle/confidence game” had been logged year to date, according to the City of Dallas Open Data crime analytics dashboard. That is just over a 4% increase compared with 2022, which saw a nearly identical increase over 2021.

A Maryland man recently lost $38,000 after receiving a call from a scammer impersonating his granddaughter, according to CBS News.

The Federal Communications Commission warns people to be extremely careful about responding to requests for personal identifying information.

“If someone calls you and asks for personal information, don’t give it out right away. Ask for their name and the reason for the call. If they claim to be from a company, ask for their phone number and extension, and hang up! Tell them you will be calling back and then call back from a verified phone number. Same with your friends and family. Hang up and call back using the contact information you have on file,” the Haverford Township Police Department in Pennsylvania suggested in a press release about AI cloning.

The Haverford Township police suggest agreeing on a secret code word or phrase with family members for use in emergencies, and asking for it before revealing any information. The Washington Post calls this an “AI safeword.”

The department also suggests asking questions about details or anecdotes that only the real person would know.